Data Engineering

© Sabine Haag 2021. All rights reserved

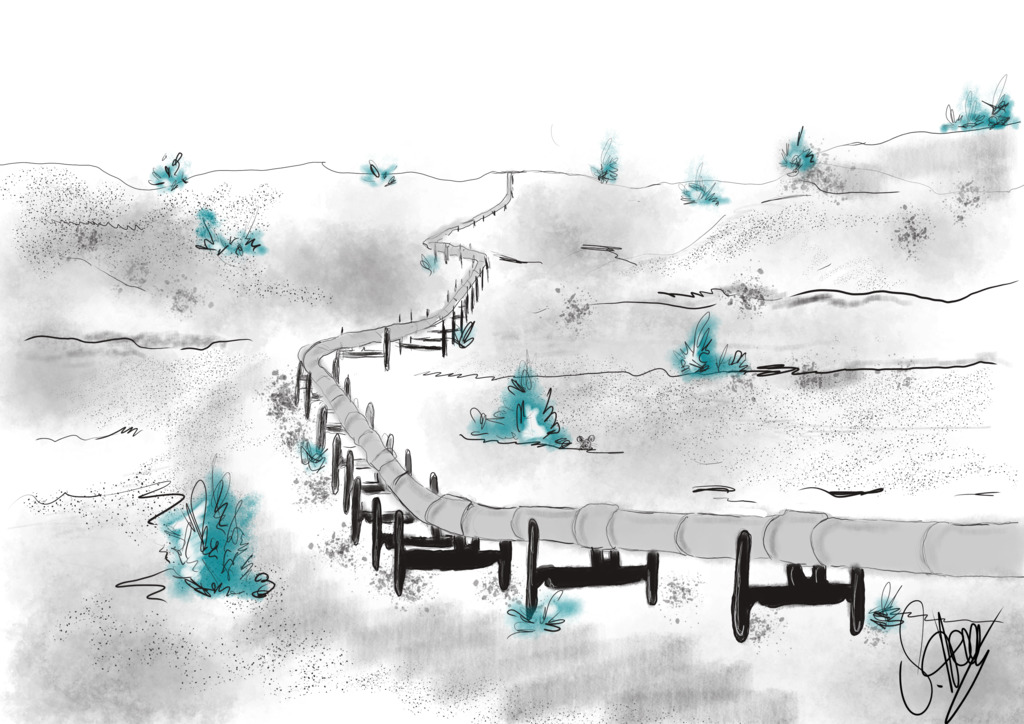

Collecting data and storing information - I have been designing ETL pipelines for more than 15 years now.

The purpose of an ETL pipeline is to collect data from source systems and to provide information for different usage scenarios, such as:

- Classical reporting

- OLAP

- Dashboards

- Interactive analysis and reporting

- Machine learning

- Data science

Between 2004 and 2015 the focus was on pipelines from relational databases into OLAP reporting.

Since 2015, the tooling has shifted to Big Data technologies, data lakes, and cloud computing. Processing has become distributed as well: the pipeline runs on a cluster of multiple hosts.

My first ETL project already used a streaming approach, and I normally design all pipelines on top of a streaming platform.

Portfolio

- Design and build ETL streaming pipelines

- Analyze and design overall processes

- Analyze existing data sources and design data models

- Design meta-level repositories to manage lots of input sources

- Batch or streaming ETL pipelines (“near real-time”)

- System integration: Connect source systems

- Design architecture for incremental updates, even on append-only output systems like Parquet files on HDFS

- Design and implement stateless and stateful transformations

- Write output to various databases or filesystems

- Provide monitoring functionality for operators

- Tuning: Workload analysis, performance tuning
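One common way to get incremental updates on an append-only store is to never rewrite existing records, append every change as a new version, and resolve the latest version per key when reading. The sketch below illustrates that idea in plain Python with an in-memory list standing in for the append-only files. The names `Version`, `append_batch`, and `current_view` are hypothetical, and the tombstone convention (`None` marks a deletion) is an assumption, not a description of any specific project.

```python
from typing import Dict, Iterable, List, NamedTuple, Optional


class Version(NamedTuple):
    key: str               # business key of the record
    seq: int               # increasing load sequence number (assumed)
    value: Optional[dict]  # None marks a deletion (tombstone, assumed)


def append_batch(log: List[Version], seq: int,
                 changes: Dict[str, Optional[dict]]) -> None:
    """Append one incremental batch; existing entries are never modified."""
    for key, value in changes.items():
        log.append(Version(key, seq, value))


def current_view(log: Iterable[Version]) -> Dict[str, dict]:
    """Resolve the latest version per key and drop deleted keys."""
    latest: Dict[str, Version] = {}
    for version in log:
        seen = latest.get(version.key)
        if seen is None or version.seq >= seen.seq:
            latest[version.key] = version
    return {k: v.value for k, v in latest.items() if v.value is not None}


log: List[Version] = []
append_batch(log, 1, {"a": {"amount": 10}, "b": {"amount": 20}})
append_batch(log, 2, {"a": {"amount": 15}, "b": None})  # update a, delete b
view = current_view(log)
```

On a real cluster the same pattern would typically be expressed as a "latest row per key" query over the appended files, optionally followed by periodic compaction.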

Projects

- ETL pipeline for a corporate financial reporting system

- ETL pipeline for an IT supplies online shop

- Upload interface and ETL pipeline for fares

- ETL pipeline for sustainability reporting

- ETL pipeline for insurance policy data into an Azure data lake

E - Extract

- Upload interfaces

- REST APIs using Tomcat/Jetty/JBoss or Akka HTTP

- Application-level APIs or messages, semantic messages

- Change data capture (CDC) adapters

- Kafka Connect

- Debezium

- DB logs

- Maxwell daemon

- Messaging middleware

- Kafka

- Mosquitto (MQTT) for the Internet of Things (IoT)

- WebSphere MQ

- JEE JMS (JBoss MQ)

T - Transformation

- Stateless or stateful transformations

- Spark

- Kafka Streams

- Akka

- Include machine learning in transformations
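The difference between stateless and stateful transformations can be shown without any cluster framework. In this minimal Python sketch, `to_cents` is stateless (each output depends only on the current event), while `running_total` is stateful (it keeps a per-key total across events). The event shape and both function names are hypothetical. In Spark or Kafka Streams the state would live in a managed, fault-tolerant state store rather than in a local dictionary.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

Event = Tuple[str, float]  # (customer id, order amount); hypothetical shape


def to_cents(events: Iterable[Event]) -> Iterator[Tuple[str, int]]:
    """Stateless: each output depends only on the current event."""
    for customer, amount in events:
        yield customer, round(amount * 100)


def running_total(events: Iterable[Tuple[str, int]]) -> Iterator[Tuple[str, int]]:
    """Stateful: keeps a running total per customer across events."""
    totals: Dict[str, int] = defaultdict(int)
    for customer, cents in events:
        totals[customer] += cents
        yield customer, totals[customer]


events = [("c1", 1.50), ("c2", 2.00), ("c1", 0.25)]
result = list(running_total(to_cents(events)))
```

Because both steps are generators, they compose lazily and process one event at a time, which mirrors how a streaming pipeline chains operators.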

L - Load

Batch or streaming

- Batch systems

- Spark

- Spark standalone clusters

- Spark on YARN

- Spark on Kubernetes

- Streaming

- Spark Streaming

- Kafka Connect

- Kafka Streams, ksqlDB

- Akka Streams, Akka

Performance tuning

- Spark partition tuning

- Kafka topic tuning

- Analyze and minimize shuffles

- Optimize queries (SQL statements)

- Optimize predicate pushdown

- Type safety vs. performance

- Spark Dataset API vs. DataFrames; Frameless, Quill

- See Optimize Spark transformations in a type-safe way

Interactive data analysis

- Jupyter/Spark notebooks

Meta-level programming - Repositories

- Dealing with a large number of input interfaces (systems, tables, attributes)

- Meta-level/generic operations like trimming of all strings in unknown data structures or number formatting, date format normalization

- Data structures unknown at compile time

- Generate source based on the meta model
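A generic operation such as trimming all strings can be written once and applied to records whose structure is not known in advance. The following Python sketch walks nested dictionaries, lists, and tuples recursively and strips whitespace from every string it finds; all other values pass through unchanged. The function name `trim_strings` and the sample record are hypothetical, and a production version would be driven by the meta model rather than by runtime type checks alone.

```python
from typing import Any


def trim_strings(value: Any) -> Any:
    """Return a copy of value with all nested strings stripped of whitespace."""
    if isinstance(value, str):
        return value.strip()
    if isinstance(value, dict):
        # Keys are left as-is; only values are normalized.
        return {key: trim_strings(item) for key, item in value.items()}
    if isinstance(value, list):
        return [trim_strings(item) for item in value]
    if isinstance(value, tuple):
        return tuple(trim_strings(item) for item in value)
    return value  # numbers, booleans, None, and other types are untouched


record = {
    "name": "  Alice ",
    "tags": [" a", "b "],
    "address": {"city": " Berlin "},
    "age": 42,
}
cleaned = trim_strings(record)
```

The input record is not modified; the function builds a new structure, which keeps the operation safe to reuse across pipeline stages.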

Databases and filesystems

Sources and sinks

- HDFS, S3

- Parquet, ORC, Delta Lake

- JSON, XML, CSV

- Hive

- Kudu

- Presto

- Cassandra

- PostgreSQL, Oracle, DB2, MS SQL Server

- Elasticsearch

Programming languages

- Scala, Java, Python

- SQL

- Shell scripting

Other tools

- Provide development and integration test environments

- Docker (docker-compose)

Clustering, cloud experience

- On-premises clusters

- Hadoop

- Kafka

- Spark

- Cassandra

- AWS

- Azure